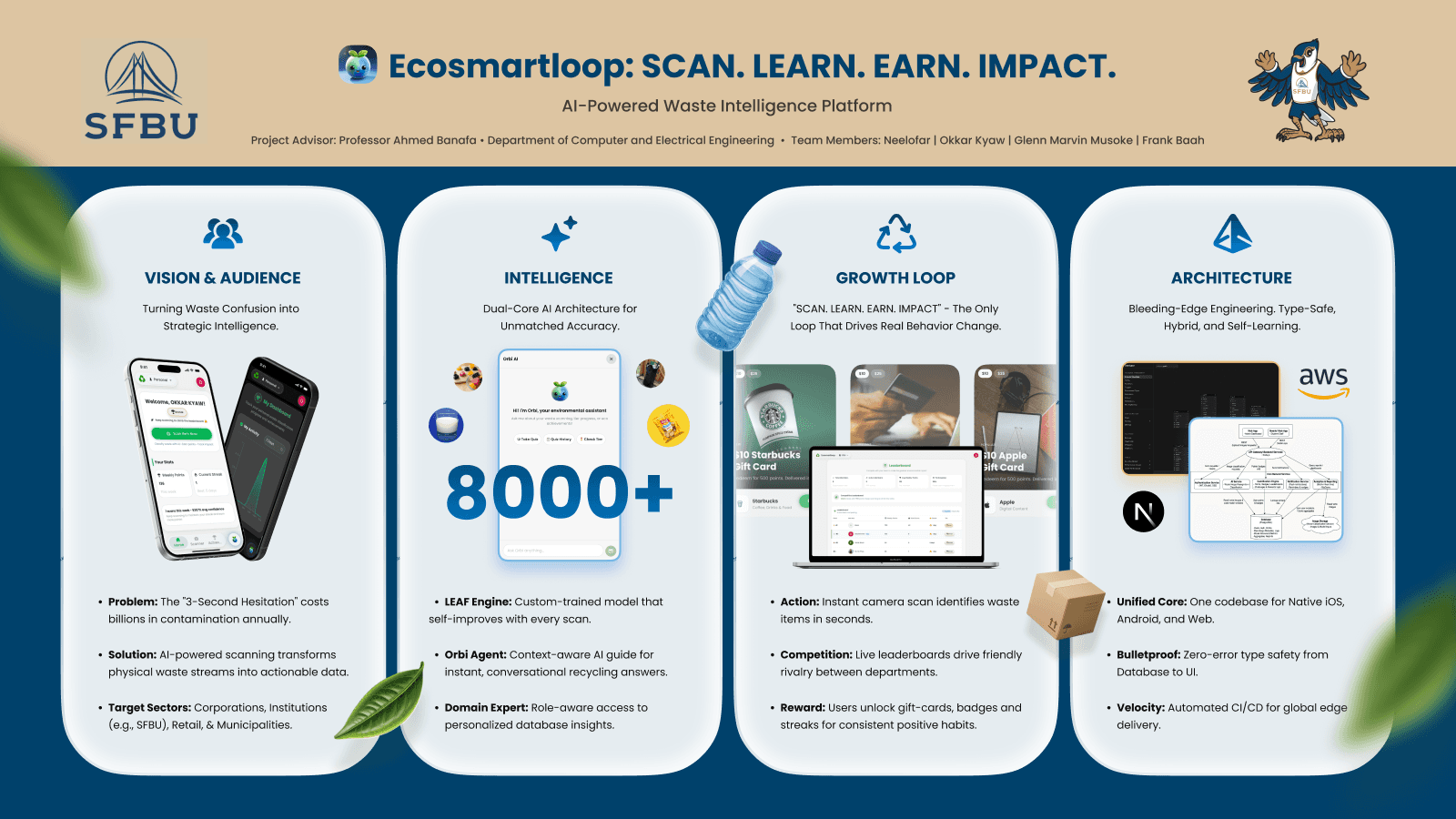

How We Built an AI Waste Classifier to Solve a $640 Billion Problem + California SB 1383 Compliance

January 27, 2026

Steve Oak (Okkar Kyaw)

$640 billion in waste by 2050. Not from big mistakes. From small ones that compound.

You've stood in front of three bins. Trash. Recycling. Compost. You don't know which one. You guess. 8 out of 10 times, people get it wrong. Multiply that by every business, every day, for decades.

California's SB 1383 mandates organic waste diversion, but over 500,000 businesses face fines they don't see coming. Not because they're careless. Because it's genuinely confusing. The "3-second hesitation" in front of the bins costs businesses thousands in penalties and contamination fees.

We had a few questions going in:

How do you make waste sorting engaging for people who have a million other things to worry about? Everyone cares about the environment in theory, but nobody wants to spend extra brain energy on it when they've got actual work to do.

How do you make AI actually useful instead of just a ChatGPT embed with a green theme? We wanted an AI that's a domain expert in recycling, one that can do research and explain its reasoning, not just spit out generic responses.

How do you ship to iOS, Android, and web without maintaining three codebases?

How do you make this viable as a startup, not just a capstone project? Even if the app works for users, it needs to be appealing for founders and investors if we ever want to scale it.

Vibe coding with AI is genuinely useful for moving fast. But we set a quality bar early: this project needed to go beyond what vibe coding typically produces. Production quality, not just a demo that works on the home screen. We wanted it to hold up when actual organizations threw edge cases at it.

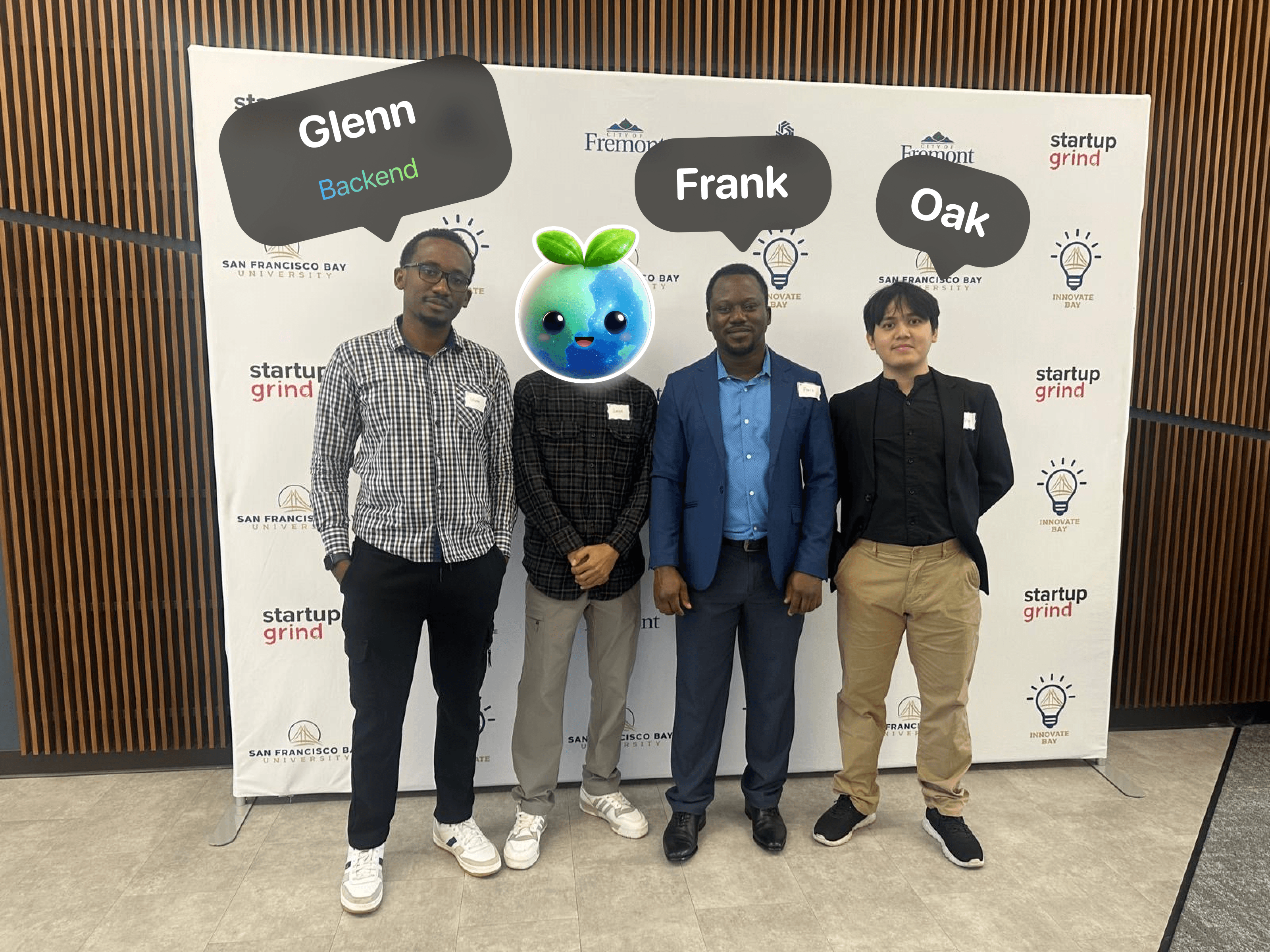

Where the idea came from: we had four people and about four months (Sep-Dec 2024) to build something for our capstone. We brainstormed a lot of directions before landing here. One early concept was an event scheduling app like Luma but for multicultural events, personalized to different ethnicities. We explored several other ideas too, trying to find something that solved a real problem instead of just sounding impressive. Eventually we agreed on tackling the waste problem, starting from California and making it scalable.

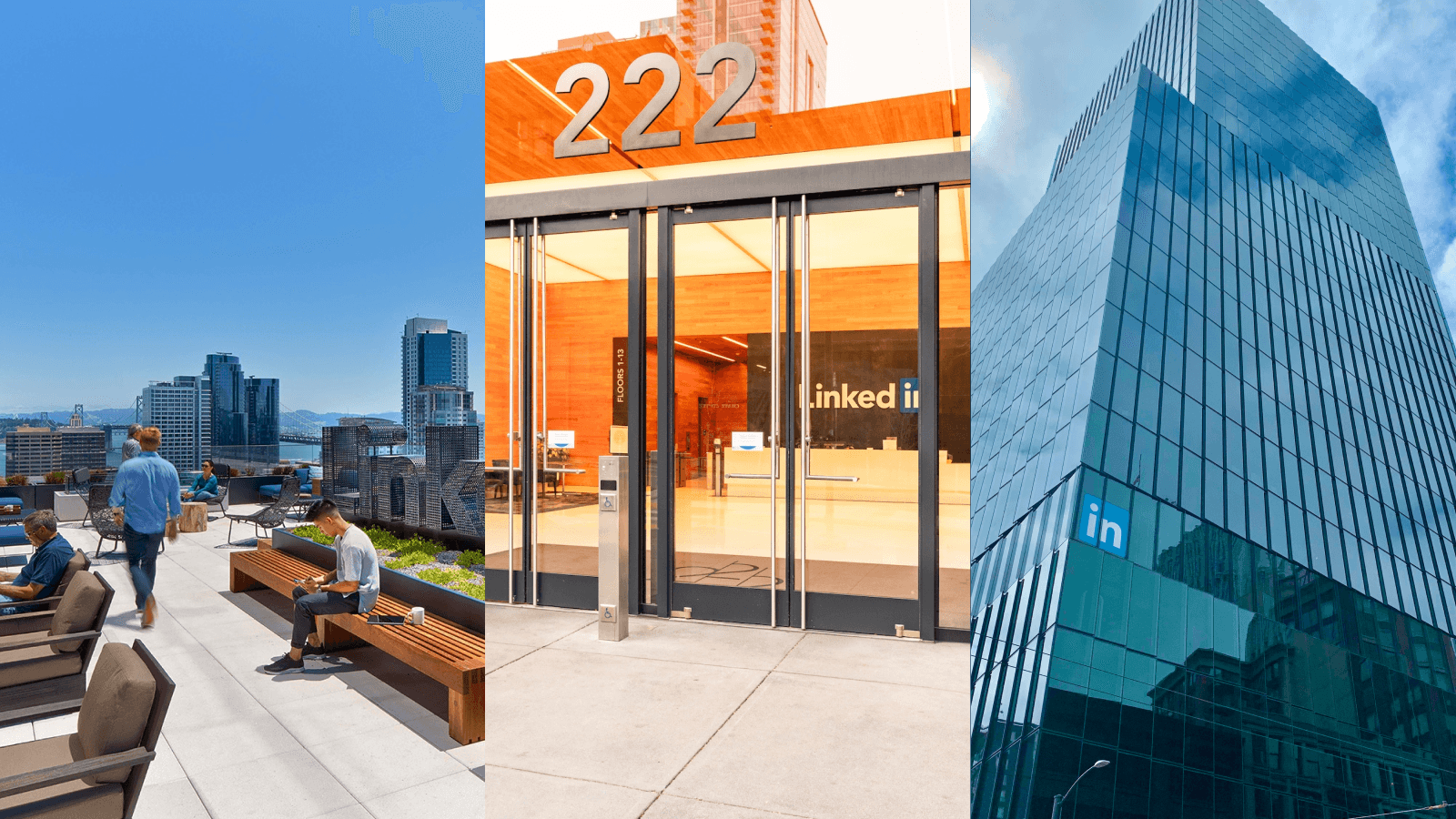

The B2B angle came later. I was at a career fair on top of the LinkedIn building, talking with people from Google and some startups. They mentioned that institutions were already using recycling analytics, and they all had the same complaints about the tools. That's what shaped our focus on businesses and institutions instead of trying to guilt individual consumers into caring more.

How I approached it

Switching to Claude Code mid-project

I'd been using Cursor. Switched to Claude Code about a month in because developers kept recommending it.

Here's what most people don't expect: vibe coding is easy at first. Fun, even. But the further you get into a project, the harder it becomes to iterate in the direction you actually want. I kept getting suggestions that were close but not quite right.

After a lot of wasted weeks, I figured out that context engineering matters more than prompt engineering. The perfect question doesn't help if the AI doesn't have the right context to work with.

What works for me now: I do detailed logging throughout the codebase, then filter down to the specific lines that matter for whatever I'm working on. The AI gets precision instead of noise. Hallucinations basically stopped.
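A rough sketch of that filtering step, to make the idea concrete. The `LogEntry` shape, the scope names, and both helper functions are hypothetical; the post doesn't describe the actual logging format, so treat this as one way it could look, not the setup used in the project.

```typescript
// Hypothetical log entry shape; the real format is not described in the post.
interface LogEntry {
  scope: string; // e.g. "scanner", "auth" (illustrative names)
  level: "debug" | "info" | "warn" | "error";
  message: string;
}

// Keep only entries for the scope being worked on, at or above a minimum level.
function filterForContext(
  entries: LogEntry[],
  scope: string,
  minLevel: LogEntry["level"] = "info",
): LogEntry[] {
  const order: LogEntry["level"][] = ["debug", "info", "warn", "error"];
  const min = order.indexOf(minLevel);
  return entries.filter(
    (e) => e.scope === scope && order.indexOf(e.level) >= min,
  );
}

// Render the filtered entries as a compact block to paste into a prompt.
function toContextBlock(entries: LogEntry[]): string {
  return entries
    .map((e) => `[${e.level.toUpperCase()}] ${e.scope}: ${e.message}`)
    .join("\n");
}

const logs: LogEntry[] = [
  { scope: "scanner", level: "debug", message: "camera frame received" },
  { scope: "scanner", level: "error", message: "classification timed out" },
  { scope: "auth", level: "info", message: "session refreshed" },
];

const contextBlock = toContextBlock(filterForContext(logs, "scanner", "warn"));
console.log(contextBlock);
// prints: [ERROR] scanner: classification timed out
```

Three log lines go in, one comes out. That single line is what the model sees, instead of the whole log.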

The downside: learning new workflows when you're already in the middle of a project is annoying. But the improvement in iteration quality made it worth the disruption.

CapacitorJS for native cross-platform

The app is built with Next.js and React, so it's fundamentally web-based. But the nature of what we're building, scanning waste items on the go, means people need to use it like a mobile app. Nobody's going to open a browser tab while standing in front of a trash bin.

We had three options: React Native, CapacitorJS, or native SDKs for each platform. We went with Capacitor. With roughly four months and four people, we couldn't afford to maintain separate iOS and Android apps plus a web version. Capacitor let us wrap our Next.js app and ship everywhere from one codebase.
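For readers who haven't used Capacitor, the wrapping mostly comes down to a small config file that points at the built web assets. The sketch below assumes the Next.js app is built as a static export; the post doesn't say whether the app was bundled this way or loaded from a hosted URL. The app ID is made up, and `MinimalCapacitorConfig` is a local stand-in for the `CapacitorConfig` type that ships with `@capacitor/cli`.

```typescript
// Local stand-in for the CapacitorConfig type from @capacitor/cli,
// reduced to the three fields used here so the snippet stays self-contained.
interface MinimalCapacitorConfig {
  appId: string;
  appName: string;
  webDir: string;
}

// Hypothetical values; the real app ID is not given in the post.
const config: MinimalCapacitorConfig = {
  appId: "com.example.ecosmartloop",
  appName: "EcoSmartLoop",
  // Next.js writes a static export to "out" when next.config sets output: "export".
  webDir: "out",
};

console.log(JSON.stringify(config));
```

In a real `capacitor.config.ts` this object would be the file's default export, and the native iOS and Android projects would pick up whatever is in `webDir` on each sync.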

It worked on phones in the end. But getting there was painful. Cache behavior is different across operating systems in ways that aren't documented anywhere useful. Things that ran fine in the browser broke on actual phones. We got it working, but the constant debugging and fixing every time something broke wasn't worth the effort.

My honest take: if you're in a rush and need to ship everywhere, Capacitor can work. But native is better. React Native or actual native SDKs will save you headaches in the long run.

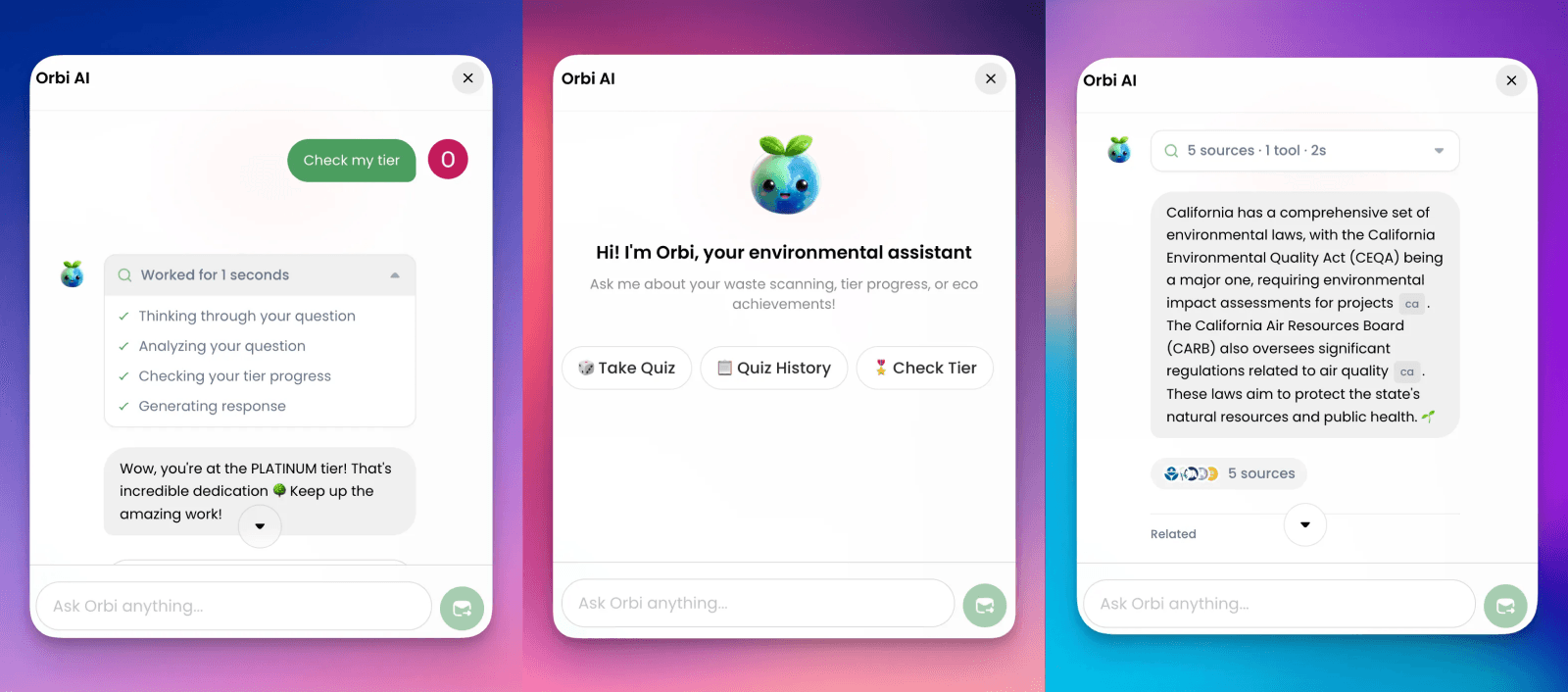

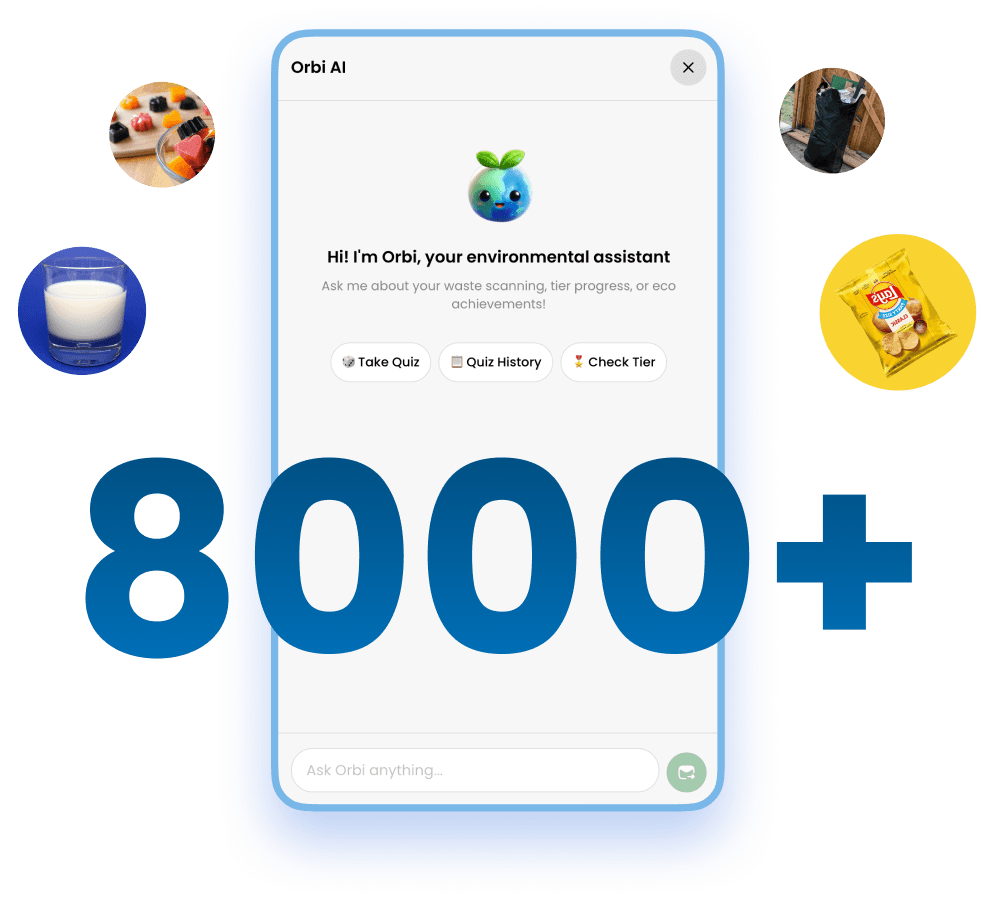

Building Orbi AI

The easy path would've been embedding a ChatGPT interface with some recycling prompts. We didn't want that. We wanted something that actually knew waste classification and could explain its reasoning.

I built Orbi AI using Vercel AI SDK v5. Shadcn doesn't have AI chat components, but luckily I found Vercel AI Elements. I started with those building blocks and reskinned them for our brand.

What Orbi does:

Tool calling that renders different UI based on what you're asking about

Shows its reasoning ("Compost. Remove the plastic lid first.")

Searches and cites sources with expandable modals

Generates quizzes from the conversation to help stuff stick
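To illustrate the first point, here is a minimal sketch of mapping tool results to different UI blocks. It deliberately avoids the AI SDK itself and only shows the dispatch idea. The tool names, result shapes, and component names are all assumptions; the post doesn't list Orbi's actual tools.

```typescript
// Hypothetical tool results; Orbi's real tools are not listed in the post.
type ToolResult =
  | { tool: "classifyItem"; item: string; bin: "trash" | "recycling" | "compost"; note?: string }
  | { tool: "searchSources"; sources: { title: string; url: string }[] }
  | { tool: "generateQuiz"; questions: { prompt: string; answer: string }[] };

// What the chat UI should render. Component names are illustrative.
interface UiBlock {
  component: "BinCard" | "SourceList" | "QuizCard";
  summary: string;
}

function capitalize(s: string): string {
  return s.charAt(0).toUpperCase() + s.slice(1);
}

// One branch per tool: each result type picks its own component.
function toUiBlock(result: ToolResult): UiBlock {
  switch (result.tool) {
    case "classifyItem":
      return {
        component: "BinCard",
        summary: result.note
          ? `${capitalize(result.bin)}. ${result.note}`
          : `${capitalize(result.bin)}.`,
      };
    case "searchSources":
      return { component: "SourceList", summary: `${result.sources.length} source(s)` };
    case "generateQuiz":
      return { component: "QuizCard", summary: `${result.questions.length} question(s)` };
  }
}

const binBlock = toUiBlock({
  tool: "classifyItem",
  item: "coffee cup",
  bin: "compost",
  note: "Remove the plastic lid first.",
});
console.log(binBlock.component, "->", binBlock.summary);
// prints: BinCard -> Compost. Remove the plastic lid first.
```

The discriminated union keeps each tool's payload typed, so the rendering code can't accidentally read quiz fields off a classification result.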

This took way more engineering than dropping in a widget. But it's the reason the app doesn't feel like every other ChatGPT wrapper out there.

Motion animations

I almost skipped animations to ship faster. Glad I didn't.

The standard for UI quality in 2025 is higher than it was even a year ago. Basic interfaces look dated immediately. I bought Motion Plus and invested time learning it.

Took longer than expected. But when you scan something and the result slides in with a subtle spring, or achievements pop with actual weight, it changes how the app feels. People notice polish even if they can't articulate why.

Fighting AI slop

The backend came together fast with AI. Frontend design was the hard part.

If you're keeping up with the discourse, you know "AI slop" became the most popular term in 2025 for a reason. There aren't many good resources for AI-assisted frontend design that doesn't look generic. I tried a lot of tools: Bolt, Lovable, Figma MCP, v0. Most of them spit out code that worked but looked like every other AI-generated app.

What finally clicked was Figma Make.

Here's the workflow I developed: I design in Figma, then use Figma Make's Github connector and click inspector to extract the component structure. Instead of piping that directly through Figma MCP (which loses reasoning context), I create a detailed markdown file with the exact design specs and feed that to Claude Code. The AI gets the precision it needs to match the actual design, not approximate it.

Takes more steps than "just use the MCP." But the output matches what I designed instead of what the model thinks a component should look like.
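As a sketch of what that markdown spec file could look like when generated from structured data: the `ComponentSpec` shape, the token names, and the values below are hypothetical and are not Figma Make's export format. In practice the file could just as well be written by hand.

```typescript
// Hypothetical spec shape; fields are illustrative, not a Figma Make format.
interface ComponentSpec {
  componentName: string;
  layout: string;
  tokens: Record<string, string>; // design token name -> value
  states: string[];
}

// Turn one component spec into a markdown section an AI coding tool can read.
function specToMarkdown(spec: ComponentSpec): string {
  const tokenLines = Object.keys(spec.tokens).map(
    (key) => `- ${key}: ${spec.tokens[key]}`,
  );
  const stateLines = spec.states.map((s) => `- ${s}`);
  return [
    `## ${spec.componentName}`,
    "",
    `Layout: ${spec.layout}`,
    "",
    "### Tokens",
    ...tokenLines,
    "",
    "### States",
    ...stateLines,
  ].join("\n");
}

const scanResultCard: ComponentSpec = {
  componentName: "ScanResultCard",
  layout: "vertical stack, 16px gap, 24px padding",
  tokens: { radius: "20px", background: "rgba(255, 255, 255, 0.6)" },
  states: ["default", "loading", "error"],
};

const specMarkdown = specToMarkdown(scanResultCard);
console.log(specMarkdown);
```

The point is that exact values (spacing, radius, states) end up in plain text the model can't misread, which is the precision the workflow above is after.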

The goal was a design that felt responsive and polished, not obviously AI-generated. We wanted something closer to liquid glass aesthetics (without liquid glass), not the flat, bare-bones look that screams "I just vibecoded and didn't go deeper."

On the ML side, the LEAF Engine isn't static. We set up AWS and Supabase so it auto-retrains on new waste images. The model gets better over time without manual intervention. That's the difference between a demo and something that could actually run in production.
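The post doesn't say what triggers a retraining run, so the following is only a guess at the kind of rule such a pipeline might use: retrain once enough new labeled images have accumulated, or when the model is stale and at least some new data exists. Every name and threshold here is an assumption, and the sketch is in TypeScript for consistency even though the ML side of the project is Python.

```typescript
// Hypothetical policy; both thresholds are assumptions for illustration.
interface RetrainPolicy {
  minNewImages: number; // retrain as soon as this many new images exist
  maxModelAgeDays: number; // or when the model is older than this
}

interface ModelState {
  newLabeledImages: number; // images added since the last training run
  lastTrainedAt: Date;
}

function shouldRetrain(state: ModelState, policy: RetrainPolicy, now: Date): boolean {
  const msPerDay = 24 * 60 * 60 * 1000;
  const ageDays = (now.getTime() - state.lastTrainedAt.getTime()) / msPerDay;
  const enoughData = state.newLabeledImages >= policy.minNewImages;
  // A stale model is only worth retraining if there is something new to learn from.
  const staleWithData = ageDays >= policy.maxModelAgeDays && state.newLabeledImages > 0;
  return enoughData || staleWithData;
}

const policy: RetrainPolicy = { minNewImages: 200, maxModelAgeDays: 30 };
const lastRun = new Date("2025-01-01T00:00:00Z");
const today = new Date("2025-01-05T00:00:00Z");

console.log(shouldRetrain({ newLabeledImages: 500, lastTrainedAt: lastRun }, policy, today)); // true
console.log(shouldRetrain({ newLabeledImages: 50, lastTrainedAt: lastRun }, policy, today)); // false
```

A check like this would typically run on a schedule or on upload, with the actual training job kicked off elsewhere.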

How it came together

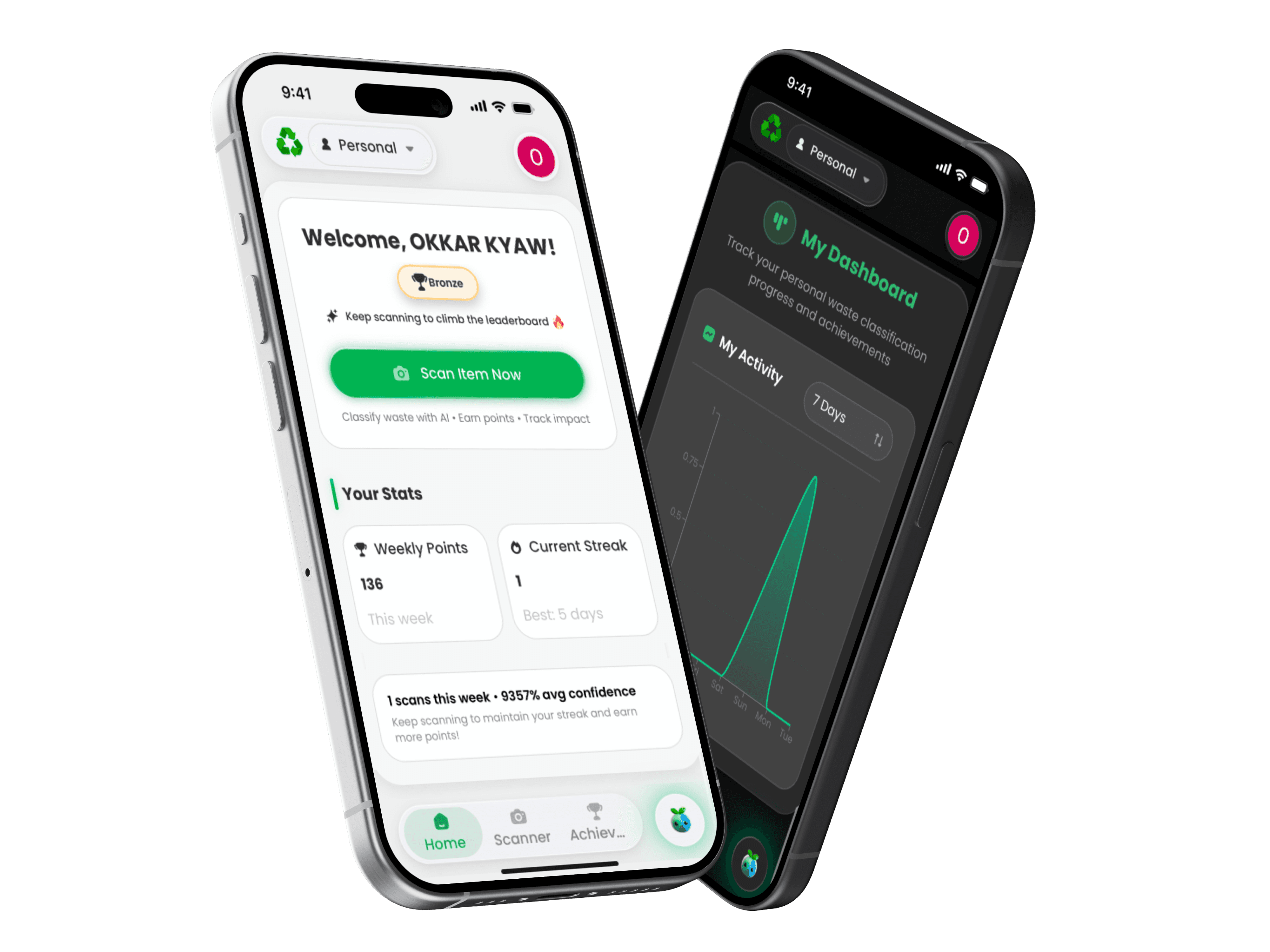

V1: it worked, but nobody cared

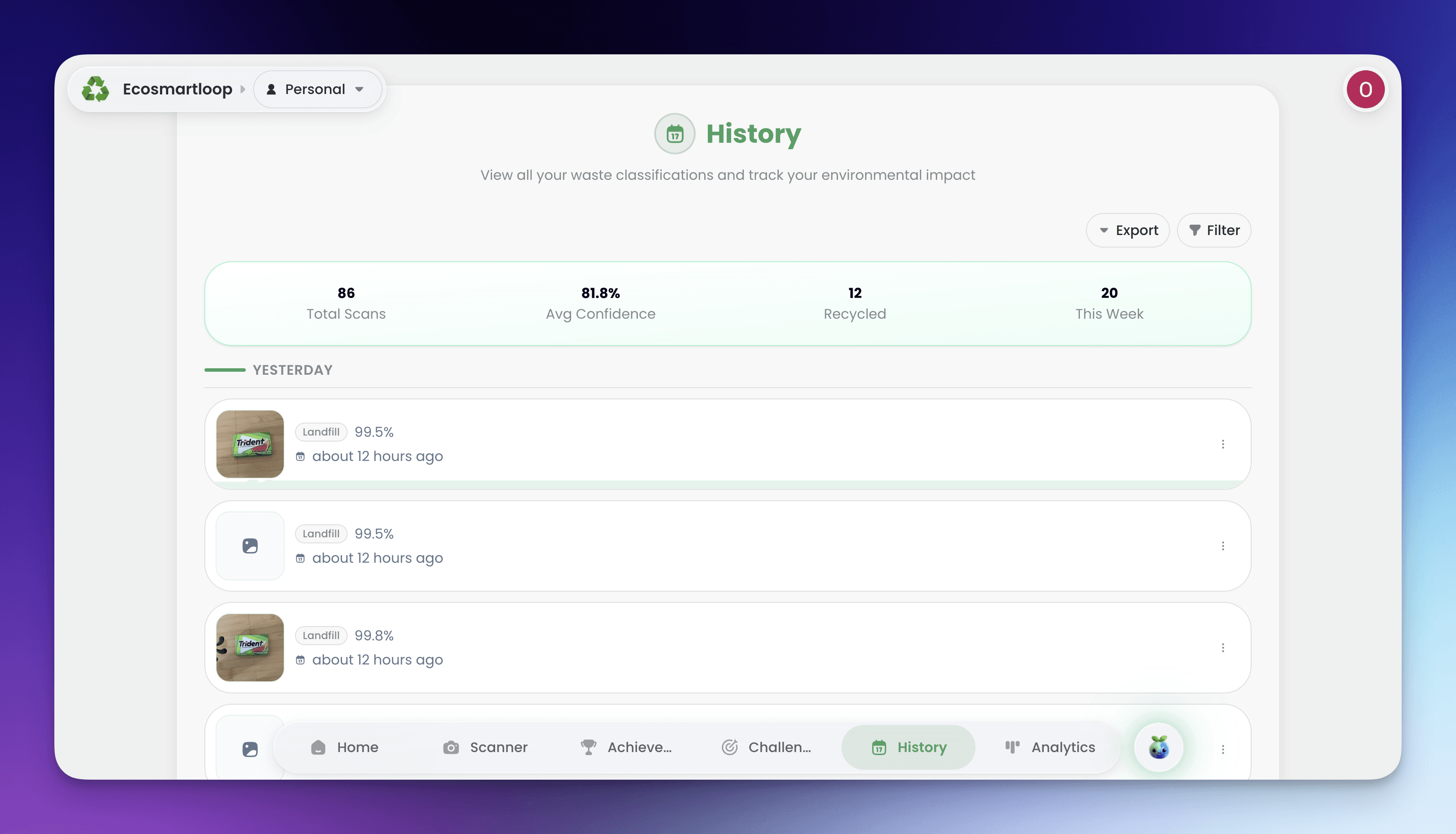

First version had the scanner, a dashboard, basic analytics. Technically functional. You could scan waste and get the right answer.

Problem: there was no reason to keep using it. Green guilt only motivates people for so long.

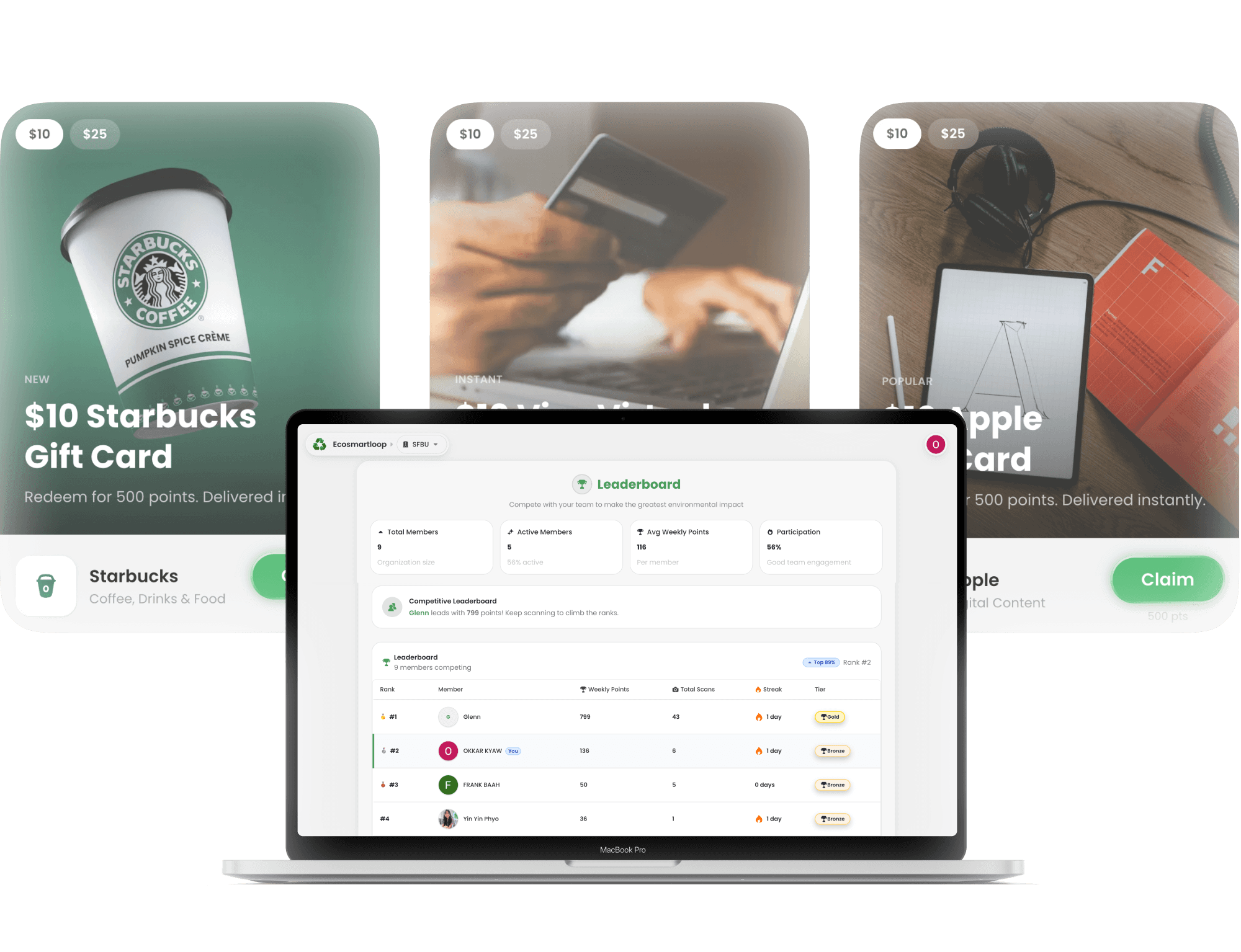

V2: gamification that worked

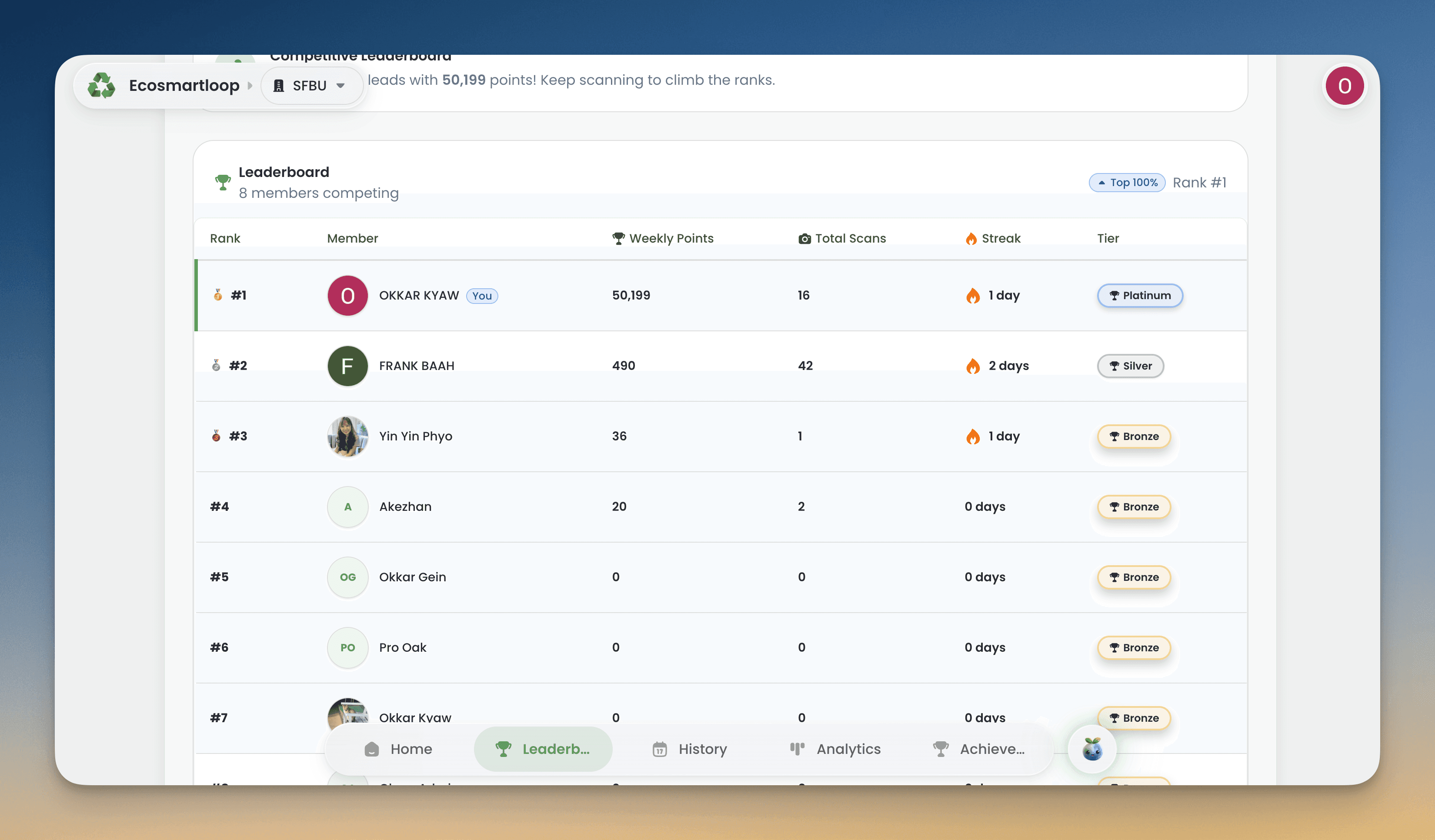

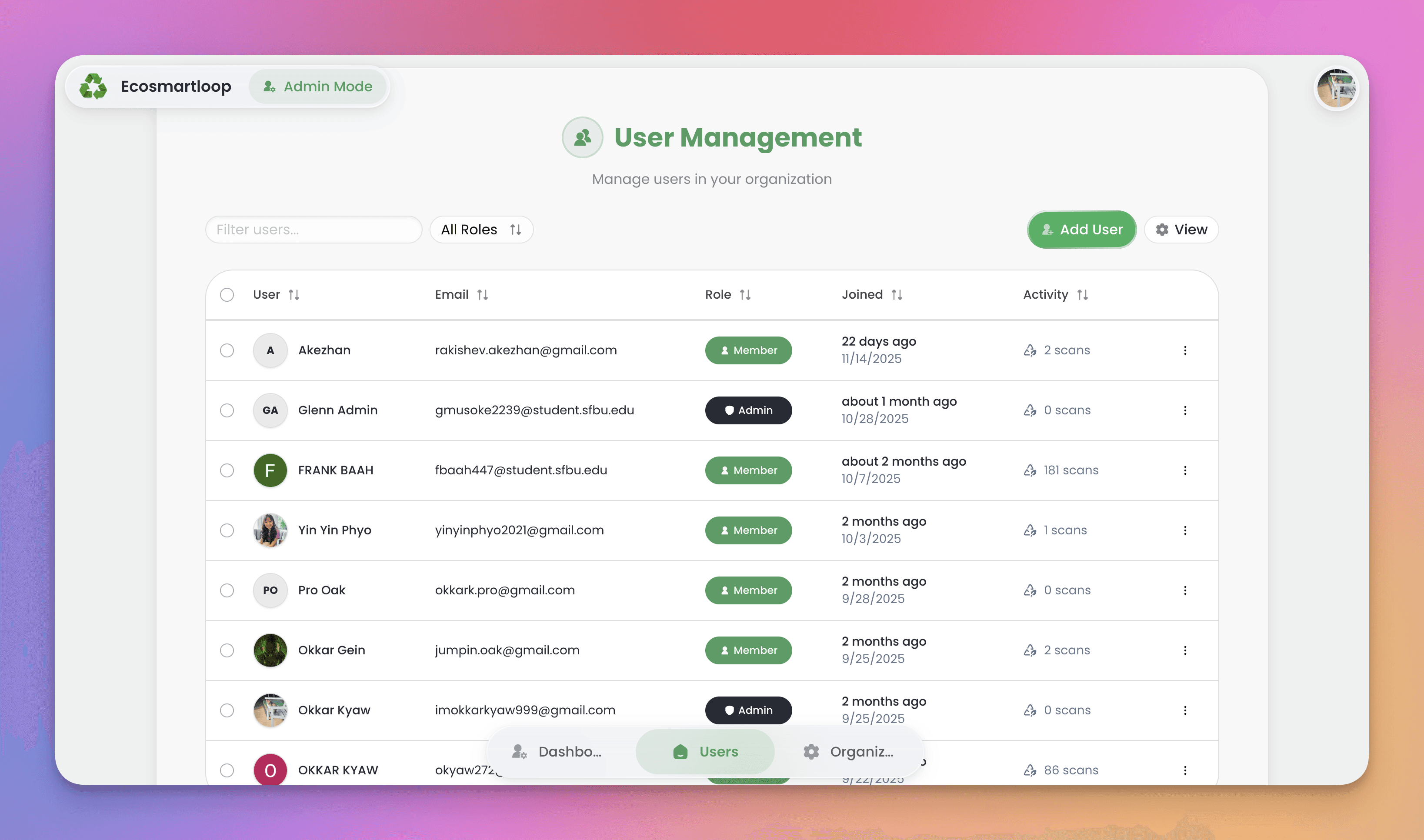

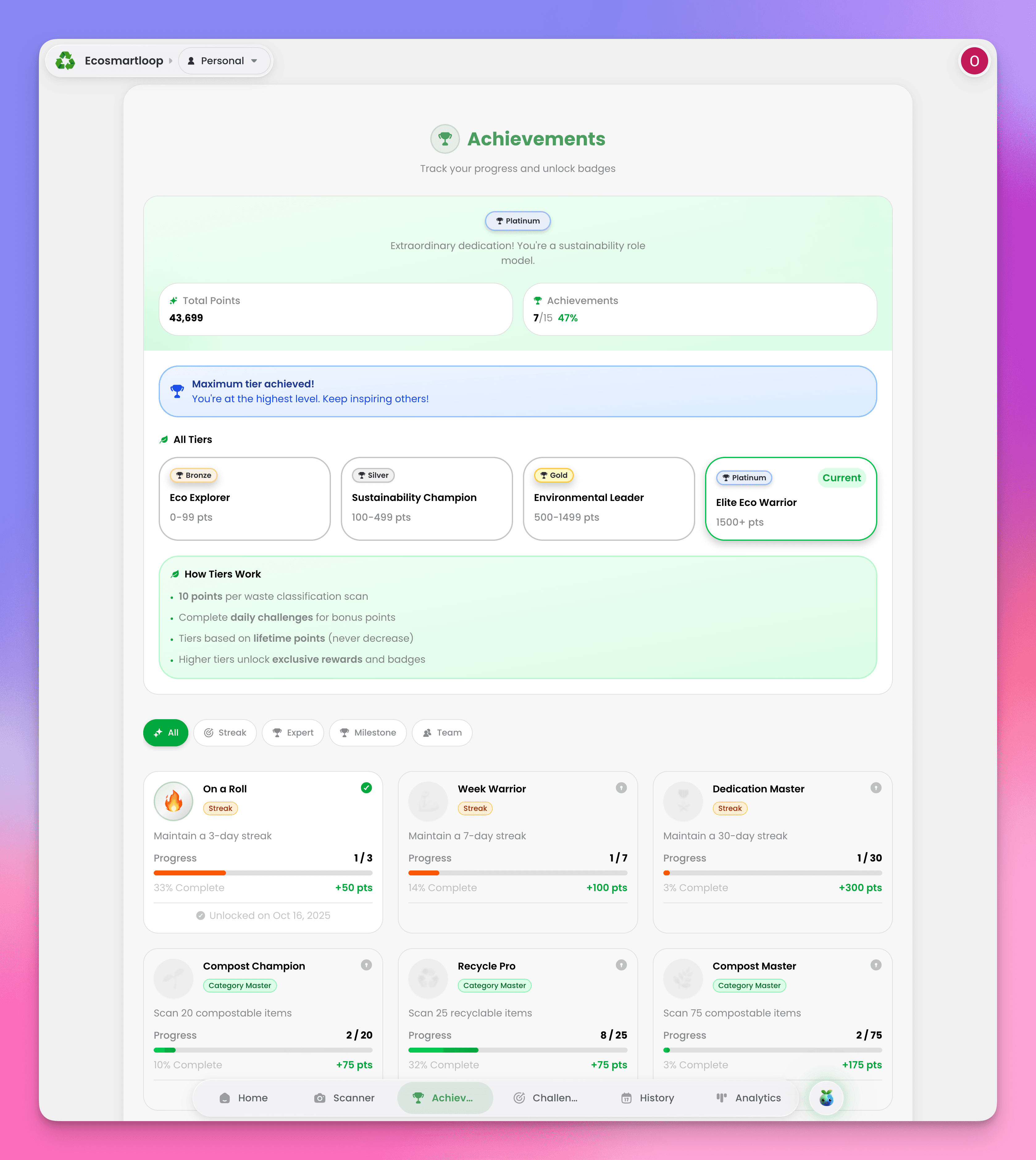

We added gift card rewards through Tremendous (2,000+ brand options), an achievement system with tiers, and leaderboards where departments could compete against each other.
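A minimal sketch of how tiers and a department leaderboard could be computed from scan events. The event shape, tier names, and thresholds are hypothetical; the post doesn't give the real scoring rules.

```typescript
// Hypothetical scan record; the real schema is not shown in the post.
interface ScanEvent {
  userId: string;
  department: string;
  correct: boolean;
}

type Tier = "bronze" | "silver" | "gold";

// Tier from a user's count of correct scans (thresholds are illustrative).
function tierFor(correctScans: number): Tier {
  if (correctScans >= 100) return "gold";
  if (correctScans >= 25) return "silver";
  return "bronze";
}

// Rank departments by number of correct scans, highest first.
// Ties are broken alphabetically so the order is stable.
function departmentLeaderboard(
  events: ScanEvent[],
): { department: string; score: number }[] {
  const totals: Record<string, number> = {};
  for (const e of events) {
    if (!e.correct) continue;
    totals[e.department] = (totals[e.department] || 0) + 1;
  }
  return Object.keys(totals)
    .map((department) => ({ department, score: totals[department] || 0 }))
    .sort((a, b) => b.score - a.score || a.department.localeCompare(b.department));
}

const scanEvents: ScanEvent[] = [
  { userId: "u1", department: "Facilities", correct: true },
  { userId: "u2", department: "Engineering", correct: true },
  { userId: "u2", department: "Engineering", correct: false },
  { userId: "u3", department: "Facilities", correct: true },
];

const leaderboard = departmentLeaderboard(scanEvents);
console.log(leaderboard); // Facilities (2) ranks above Engineering (1)
console.log(tierFor(30)); // "silver"
```

Only correct scans count toward the score here, which is one way to reward accuracy over volume.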

Problems we hit

| What went wrong | What we did |
|---|---|
| LEAF Engine accuracy was inconsistent | Frank and Glenn trained it on 8,000+ real waste images and set up auto-retraining |
| Caching broke on mobile | LocalStorage with OS-specific invalidation logic, tested on real devices early instead of simulators |
| AI responses felt generic | Tool calling plus heavy system prompt tuning for domain expertise |
| UI felt flat | Full Liquid Glass inspired Redesign + Motion animations on scan results, achievements, leaderboard updates |
| No AI chat components in Shadcn | Built from Vercel AI SDK components, reskinned for our brand |
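For the caching fix, here is a sketch of what "LocalStorage with OS-specific invalidation" might look like. The per-platform lifetimes and the version check are assumptions; the post doesn't describe the actual rules. The store is abstracted behind a small interface so the logic can be exercised without a browser. In the app, `window.localStorage` would fit that interface.

```typescript
type Platform = "ios" | "android" | "web";

// Minimal storage contract matching the parts of localStorage used here.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
  removeItem(key: string): void;
}

// Hypothetical per-platform lifetimes; the real rules are not in the post.
const MAX_AGE_MS: Record<Platform, number> = {
  ios: 5 * 60 * 1000,
  android: 15 * 60 * 1000,
  web: 60 * 60 * 1000,
};

interface CacheEnvelope<T> {
  savedAt: number;
  appVersion: string;
  value: T;
}

function writeCache<T>(store: KeyValueStore, key: string, value: T, appVersion: string, now: number): void {
  const envelope: CacheEnvelope<T> = { savedAt: now, appVersion, value };
  store.setItem(key, JSON.stringify(envelope));
}

// Returns null (and evicts the entry) when it is too old for this platform,
// was written by a different app version, or cannot be parsed.
function readCache<T>(store: KeyValueStore, key: string, platform: Platform, appVersion: string, now: number): T | null {
  const raw = store.getItem(key);
  if (raw === null) return null;
  try {
    const envelope = JSON.parse(raw) as CacheEnvelope<T>;
    const expired = now - envelope.savedAt > MAX_AGE_MS[platform];
    if (expired || envelope.appVersion !== appVersion) {
      store.removeItem(key);
      return null;
    }
    return envelope.value;
  } catch {
    store.removeItem(key);
    return null;
  }
}

// In-memory store for trying the logic outside a browser.
function createMemoryStore(): KeyValueStore {
  const data: Record<string, string> = {};
  return {
    getItem: (key) => {
      const v = data[key];
      return v === undefined ? null : v;
    },
    setItem: (key, value) => { data[key] = value; },
    removeItem: (key) => { delete data[key]; },
  };
}

const store = createMemoryStore();
const sixMinutes = 6 * 60 * 1000;
writeCache(store, "bins", ["trash", "recycling", "compost"], "1.0.0", 0);
console.log(readCache<string[]>(store, "bins", "web", "1.0.0", sixMinutes)); // still fresh on web
console.log(readCache<string[]>(store, "bins", "ios", "1.0.0", sixMinutes)); // null: expired on iOS
```

In a Capacitor app the platform string could come from `Capacitor.getPlatform()`, which returns `"ios"`, `"android"`, or `"web"`.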

What changed for me

Before this project, I used Cursor and that was about it.

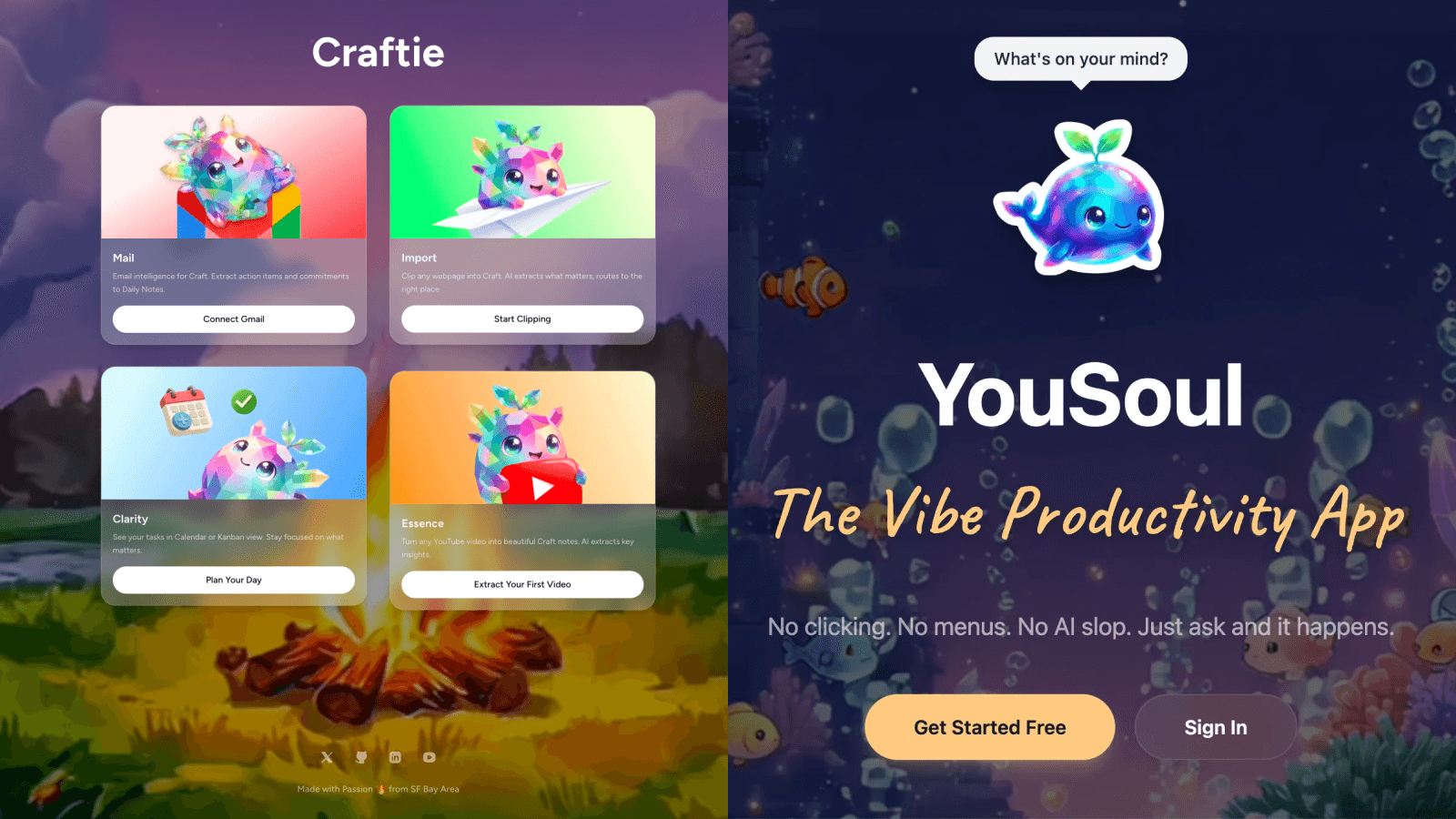

Now I use Claude Code with proper context engineering. The difference showed up fast: I built Craftie (4 micro AI tools for 1M+ Craft Docs users) and YouSoul (a productivity system) in about 3 weeks after finishing EcoSmartLoop. Compare that to 3-4 months with a team for this project. The patterns compound.

What I'd do differently

CONSUME MORE STUDIES ON AI ENGINEERING.

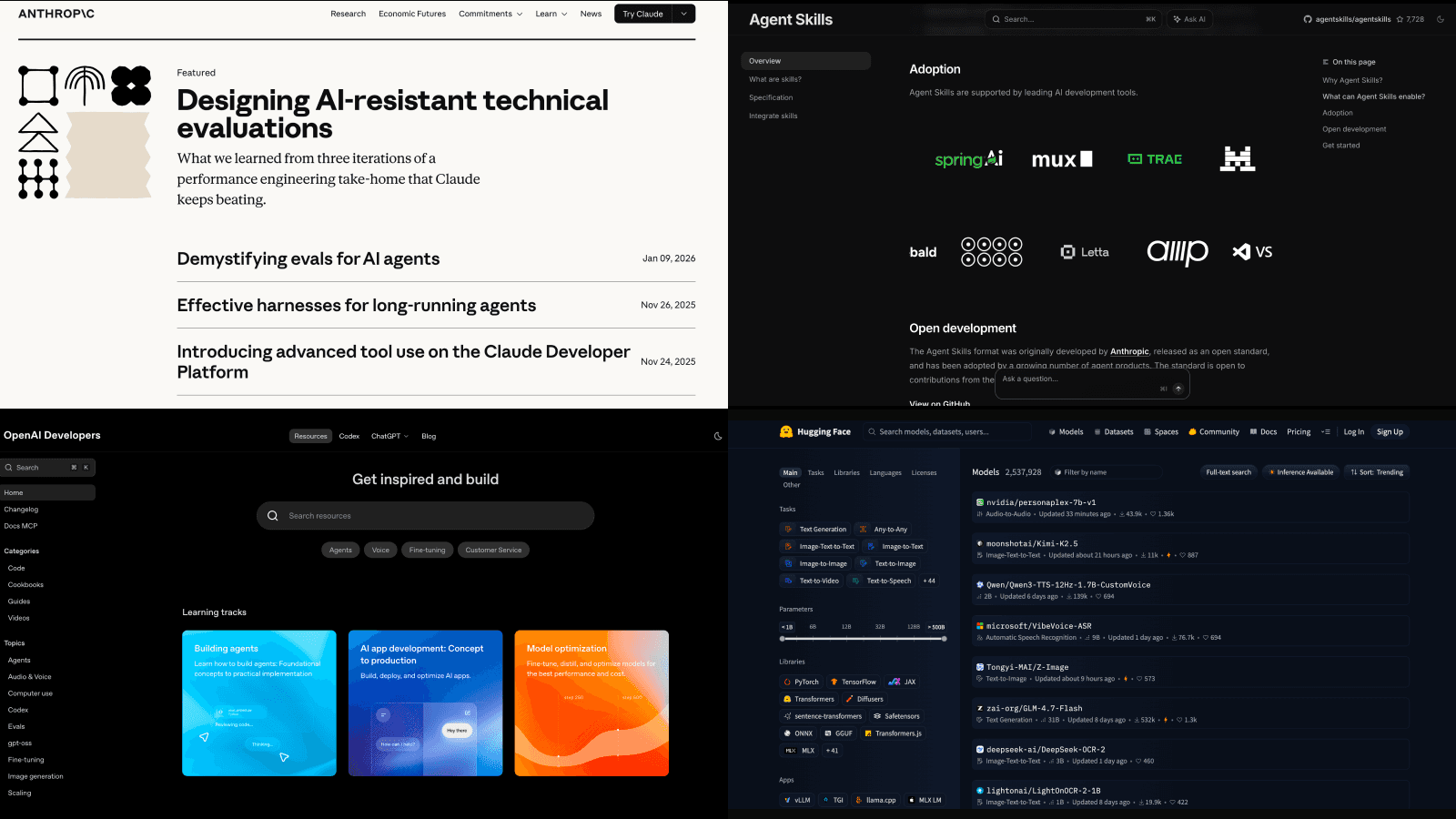

Context engineering over prompt engineering. This is the main thing. Once I started giving AI specific context instead of generic prompts, the quality of suggestions changed completely. And I would use Agent Skills even more.

Test on actual devices early. What works in the browser simulator breaks on real iOS in ways you won't predict. We lost time to this.

If I had more resources, I'd use React Native or Swift/Kotlin instead of Capacitor. Capacitor is fast to ship but the platform-specific bugs ate into time we could've spent on features. Speed versus robustness tradeoff.

Why we're not making this a startup

We talked about it. The foundation is there: multi-tenant architecture, AI that actually works, gamification that got people to come back. California first, then scale nationwide. I even did an investor pitch practice for my startup course in my master's final term.

We decided to focus on career growth first.

We're early in our careers. We want more experience, want to build more, contribute more, before taking on something that demanding. Maybe later.

If someone wants to take this forward, reach out. We're open to collaborating, open-sourcing (once we have time to make the code actually presentable and usable), or just talking through what we learned.

My tech stack

Frontend: Next.js 15, TypeScript, Vercel AI SDK v5, Motion Plus

Backend: Supabase, Prisma ORM, BetterAuth, Python ML

Mobile: CapacitorJS, Tremendous gift card API

Dev kit: Claude Code Max (mainly), GLM 4.7 (lightweight), MCPs, Agent Skills, Hooks, Figma Design, Figma Make

Check it out: Live demo | GitHub | Pitch Presentation

Highlights